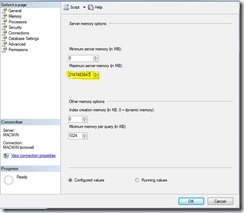

By default, SQL Server’s max server memory setting is 2,147,483,647 MB, which is effectively unlimited. With this default, SQL Server will “commit” as much memory as it can, until too little is left for the operating system to run efficiently.
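
To see what an instance is currently set to, a query along these lines should work. It is a minimal sketch that reads the `sys.configurations` catalog view; on an untouched instance, both value columns would be expected to show the default.

```sql
-- value is the configured setting; value_in_use is what the instance is running with.
SELECT name, value, value_in_use
FROM sys.configurations
WHERE name = N'max server memory (MB)';
```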

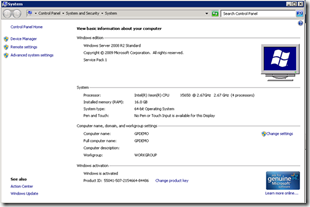

If the operating system has no memory available, it will start using the page file instead of RAM. Using the page file in place of memory will result in poor system performance.

SQL Server really does need as much memory as possible. However, that doesn’t mean we should leave SQL Server’s max memory set to unlimited. If we starve the OS of memory, Windows starts paging, and everything on the server slows down, SQL Server included.

To Fix the Problem

Set the Max Memory that SQL Server can use in your environment. To change your SQL Server Memory:

1. Open SSMS and connect to the instance

2. Right-click the instance name in Object Explorer and click “Properties” at the bottom of the menu

3. On the Memory page, change “Maximum server memory (in MB)” to a value that leaves enough RAM for the operating system, so it doesn’t fall back on the page file. For recommendations on SQL Server Max Memory settings check out Glenn Berry’s Suggested Max Memory Settings.

4. Click “OK”. The new max memory setting takes effect immediately; you don’t need to restart the SQL Server services.
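
If you would rather script the change than click through SSMS, the same setting can be applied with `sp_configure`. This is only a sketch: the 12288 MB figure is a made-up example that assumes a dedicated server with 16 GB of RAM, so substitute a value that fits your own environment.

```sql
-- 'max server memory (MB)' is an advanced option, so expose advanced options first.
EXEC sys.sp_configure N'show advanced options', 1;
RECONFIGURE;

-- Hypothetical value: 12288 MB (12 GB), assuming a dedicated 16 GB server.
EXEC sys.sp_configure N'max server memory (MB)', 12288;
RECONFIGURE;
```

Running `RECONFIGURE` applies the pending value. Scripting the setting also makes it easy to keep it consistent across several instances.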

Until next time, happy SQL Server performance tuning!